Learn how the meta robots tag controls which of your pages search engines index and which links they follow.

Introduction to Meta Robots Tag

In the vast world of the internet, websites are like treasure troves filled with valuable information waiting to be discovered. But how do search engines like Google know which pages of a website to show to people searching for specific things? This is where the meta robots tag comes into play: a behind-the-scenes instruction that tells search engines whether a page may appear in search results and whether its links should be followed.

What is a Meta Robots Tag?

Think of the meta robots tag as a note pinned to a single page for visiting search engine robots. It tells them whether that page may be listed in search results and whether the links on it should be followed. By using this tag, website owners can keep low-value pages out of search results so that their most important content is what users find.

Why is it Important?

The meta robots tag matters because it influences which of a site’s pages appear in search results. It does not raise a page’s ranking by itself, but used well it keeps thin, duplicate, or utility pages out of the index, so the pages people find are the ones worth visiting. In Search Engine Optimization (SEO), that control over what gets indexed is a basic part of managing a site’s visibility.

How Search Engines Work

Search engines like Google work by using automated programs called robots or crawlers to explore the vast expanse of the internet. These robots visit websites and follow links from one page to another, a process known as crawling.

Crawling

Crawling is like a robot exploring a maze, moving from one passage to another, reading and collecting information along the way. These robots scan the content of websites, looking at text, images, videos, and links to other sites. They gather vast amounts of data to help search engines understand what each website is about.

Indexing

After a robot crawls a website, it stores the information it gathered in databases, forming an index. This index is like a huge library catalog that helps users find what they’re looking for. When someone enters a search query, the search engine uses this index to quickly deliver relevant results.

By crawling and indexing billions of web pages, search engines aim to provide users with accurate and useful information in response to their queries. This process helps people find answers to their questions and discover new resources online.

Components of a Meta Robots Tag

A meta tag is a piece of code that provides information about a webpage. In the case of a meta robots tag, it tells search engines how to interact with the content on that page. This tag is written in the HTML of a website and is not visible to visitors. Instead, it communicates directly with search engine crawlers to guide their behavior.

Image courtesy of www.pinterest.com via Google Images

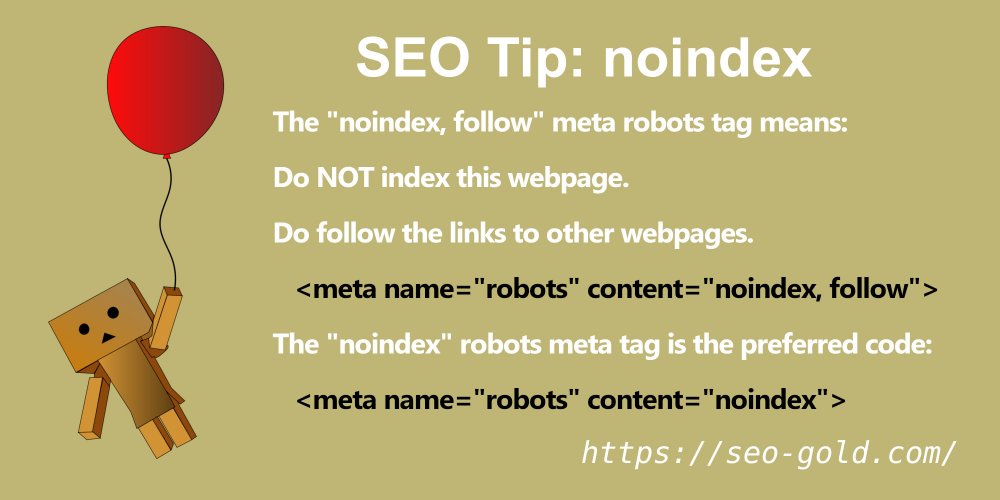

Robots Directive

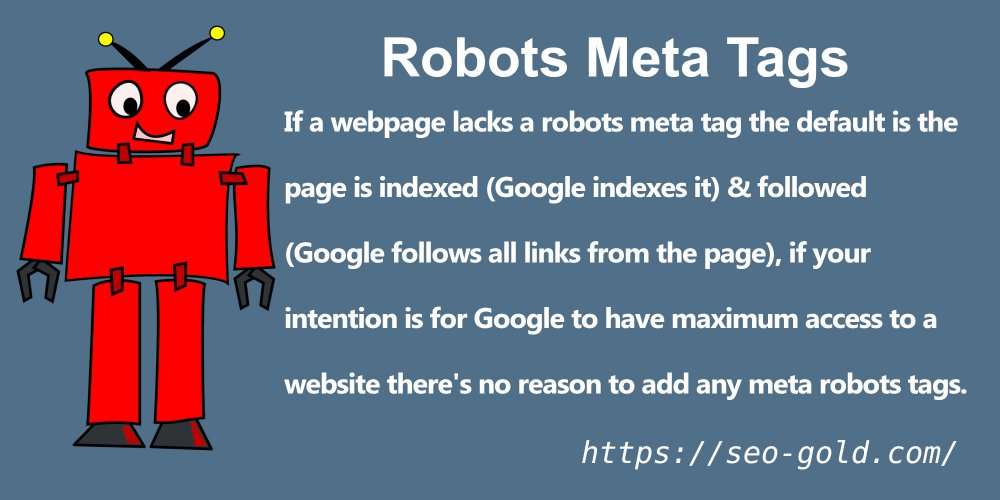

Within the meta robots tag, directives in the content attribute tell search engine crawlers how to treat the page. The most common directives are ‘index’, ‘noindex’, ‘follow’, and ‘nofollow’.

The ‘index’ directive tells search engines that they may include the page in their search results, while ‘noindex’ instructs them not to show the page. The ‘follow’ directive tells search engine bots that they may follow the links on the page, while ‘nofollow’ tells them not to follow those links. Because ‘index’ and ‘follow’ are the default behavior, a page with no meta robots tag is treated as if both were set.
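To make the four directives concrete, here is a minimal Python sketch of how a crawler might turn a content value into two yes/no decisions. The names `RobotsPolicy` and `parse_robots_content` are made up for this illustration, and the handling of the ‘none’ shorthand (equivalent to ‘noindex, nofollow’) and of unknown directives is a simplification, not a description of how any particular search engine is implemented.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RobotsPolicy:
    index: bool = True   # may the page appear in search results?
    follow: bool = True  # may crawlers follow the links on the page?


def parse_robots_content(content: str) -> RobotsPolicy:
    """Turn a content value such as "noindex, follow" into a RobotsPolicy."""
    # Directives are comma-separated and case-insensitive.
    directives = {part.strip().lower() for part in content.split(",") if part.strip()}

    # "none" is shorthand for "noindex, nofollow". A restrictive directive
    # wins over a permissive one if both appear; anything else is ignored.
    index = not ({"noindex", "none"} & directives)
    follow = not ({"nofollow", "none"} & directives)
    return RobotsPolicy(index=index, follow=follow)


print(parse_robots_content("index, follow"))
print(parse_robots_content("NOINDEX, follow"))
```

An empty content value yields the defaults, which mirrors the behavior of a page that has no meta robots tag at all.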

Common Directives and Their Uses

When it comes to meta robots tags, there are several common directives that web developers use to communicate with search engines. Understanding these directives and knowing when to apply them can significantly impact how your website is indexed and ranked. Let’s delve into the most prevalent directives and their uses:

Index vs. Noindex

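The ‘index’ directive tells search engines to include a webpage in their search results, while ‘noindex’ instructs them not to include it. If you have pages that you don’t want to show up in search results, such as internal search results, thank-you pages, or duplicate content, ‘noindex’ is the right tool. Two caveats apply: a crawler must be able to fetch the page to see the directive, so the page must not also be blocked in robots.txt, and ‘noindex’ is not a security measure, so genuinely private information should sit behind a login instead.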

Follow vs. Nofollow

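While ‘follow’ lets search engine crawlers follow the links on a webpage, ‘nofollow’ tells them not to. In a meta robots tag, ‘nofollow’ applies to every link on the page, which is useful when you don’t want search engines to pass link authority through any of them. To withhold authority from one specific link, such as a link to a terms and conditions or login page, use the rel="nofollow" attribute on that link instead.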

By carefully choosing between these directives, you can control how search engines interact with your website and optimize your online presence.

Creating a Meta Robots Tag

A meta robots tag is a snippet of HTML code that tells search engines how to index a page and treat its links. To create one, you list the directives you want in the tag’s content attribute.

Here’s an example of how to write a basic meta robots tag:

<meta name="robots" content="index, follow">

In this example, the directives “index” and “follow” are telling search engines to index the content of the webpage and follow any links it contains.

Adding to HTML

To add a meta robots tag to your website’s HTML, you need to include it within the <head> section of your webpage. Here’s how you can do it:

1. Open the HTML file of your webpage in a text editor.

2. Locate the <head> tag in the HTML code.

3. Insert the meta robots tag code within the <head> section, like this:

<head>
  <meta name="robots" content="index, follow">
  <!-- ... other meta tags and elements -->
</head>

4. Save the changes to the HTML file and upload it to your website server.

By following these simple steps, you can create and add a meta robots tag to your website’s HTML code, giving search engines valuable guidance on how to crawl and index your content.
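If your pages are generated from templates rather than edited by hand, the tag can be produced by a small helper. The Python sketch below is one way to do that; `build_robots_meta` is a hypothetical function name, and how you wire its output into your own template system is left open.

```python
from html import escape


def build_robots_meta(index: bool = True, follow: bool = True) -> str:
    """Return a meta robots tag for the given indexing and link choices."""
    directives = [
        "index" if index else "noindex",
        "follow" if follow else "nofollow",
    ]
    content = ", ".join(directives)
    # escape() keeps the attribute value safe if the directive list ever
    # comes from somewhere less controlled than the two literals above.
    return f'<meta name="robots" content="{escape(content, quote=True)}">'


print(build_robots_meta())             # tag for a normal, indexable page
print(build_robots_meta(index=False))  # tag for a page to keep out of results
```

Generating the tag in one place means a page's indexing choice lives in a single setting instead of being scattered across hand-edited HTML files.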

Testing and Viewing Meta Robots Tags

Ensuring that your meta robots tags are set up correctly is crucial for helping search engines understand your website. Testing and viewing these tags can confirm that they are guiding search engine behavior as intended. Let’s explore some ways to check if your meta robots tags are working effectively.

Using Browser Tools

To view the meta robots tag on a webpage, you can utilize browser developer tools. For example, in Google Chrome, right-click on the page, select “Inspect,” and navigate to the “Elements” tab. Look for the <meta name="robots" content="..."> tag within the HTML code to see how it is configured.

Testing Tools

Online tools can also test meta robots tags. Google Search Console’s URL Inspection tool, for example, reports whether indexing is allowed for a given page, and many SEO crawlers list the robots directives they find on every URL of a site. Using such tools, you can confirm that search engines read your directives the way you intended.
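For a quick local check, the same lookup can be scripted. The following Python sketch uses only the standard library’s `html.parser` module to pull the content value out of every meta robots tag in a piece of HTML. The class name `RobotsMetaFinder`, the function `find_robots_meta`, and the sample page are all invented for this example; a real checker would first download the page.

```python
from html.parser import HTMLParser


class RobotsMetaFinder(HTMLParser):
    """Collects the content value of every <meta name="robots"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.contents: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        # HTMLParser lowercases tag and attribute names; values keep their case.
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").strip().lower() == "robots":
            self.contents.append(attributes.get("content", ""))


def find_robots_meta(html: str) -> list[str]:
    """Return the content values of all meta robots tags found in html."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    finder.close()
    return finder.contents


# Made-up page used only to demonstrate the function.
SAMPLE_PAGE = """
<html>
  <head>
    <title>Example page</title>
    <meta name="robots" content="noindex, follow">
  </head>
  <body><p>Hello</p></body>
</html>
"""

print(find_robots_meta(SAMPLE_PAGE))  # ['noindex, follow']
```

An empty list means the page has no meta robots tag, so search engines fall back to their default behavior of indexing the page and following its links.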

Best Practices for Meta Robots Tag Usage

When it comes to using meta robots tags on your website, following best practices can help improve your SEO and ensure that search engines understand how to navigate and index your content properly. Here are some key tips to keep in mind:

Keep It Simple

It’s essential to use straightforward directives in your meta robots tags to avoid confusion. Stick to common directives like ‘index’ or ‘noindex’ to indicate whether a page should be included in search results or not. Avoid contradictory combinations such as ‘index, noindex’: when directives conflict, Google applies the most restrictive one, which may not be what you intended.

Regular Checks

Make it a habit to check and update your meta robots tags regularly. As your website evolves and new content is added, it’s crucial to ensure that your directives are still relevant and aligned with your SEO strategy. By routinely reviewing and updating your meta robots tags, you can maintain optimal performance and visibility in search engine results.

Real-World Examples

Let’s take a look at a real-world example of how meta robots tags have helped websites improve their SEO. One popular website that effectively used meta robots tags to enhance its search engine rankings is Blogging Basics 101. By strategically setting up meta robots tags with ‘index’ directives on valuable content pages and ‘noindex’ directives on duplicate or low-quality pages, Blogging Basics 101 saw a significant boost in organic traffic. This showcases the importance of properly implementing meta robots tags to guide search engine crawlers and improve overall SEO performance.

Avoiding Mistakes

On the flip side, there are also common mistakes website owners can make when it comes to meta robots tags. One common error is forgetting to update or remove outdated meta robots tags on pages that have undergone content changes. This can lead to confusion for search engines and result in pages being incorrectly indexed or excluded from search results. To avoid this mistake, it’s essential to regularly review and update meta robots tags, especially after making significant changes to website content. By staying attentive to these details, website owners can prevent detrimental impacts on their SEO efforts.
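A periodic review like this can be partly automated. The Python sketch below compares the content value found on each page with the value you expect and reports any page whose effective behavior differs. The page paths and values in `PAGES` are hypothetical, as are the function names; in practice the found values would come from crawling your own site.

```python
def effective_policy(content: str) -> tuple[bool, bool]:
    """Return (indexable, links_followed) for a content value."""
    directives = {part.strip().lower() for part in content.split(",")}
    # "none" is shorthand for "noindex, nofollow"; an empty value means defaults.
    indexable = not ({"noindex", "none"} & directives)
    links_followed = not ({"nofollow", "none"} & directives)
    return indexable, links_followed


def audit(pages: dict[str, tuple[str, str]]) -> list[str]:
    """List pages whose found directives behave differently from the expected ones."""
    problems = []
    for path, (found, expected) in sorted(pages.items()):
        if effective_policy(found) != effective_policy(expected):
            problems.append(f"{path}: expected {expected!r}, found {found!r}")
    return problems


# Hypothetical inventory: path -> (content value found, content value expected)
PAGES = {
    "/blog/meta-robots-guide": ("index, follow", "index, follow"),
    "/thank-you": ("index, follow", "noindex, follow"),
    "/old-landing-page": ("", "index, follow"),
}

for problem in audit(PAGES):
    print(problem)
```

Comparing effective behavior rather than raw strings means a page with no tag is not flagged when the expected value is simply the default.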

Conclusion

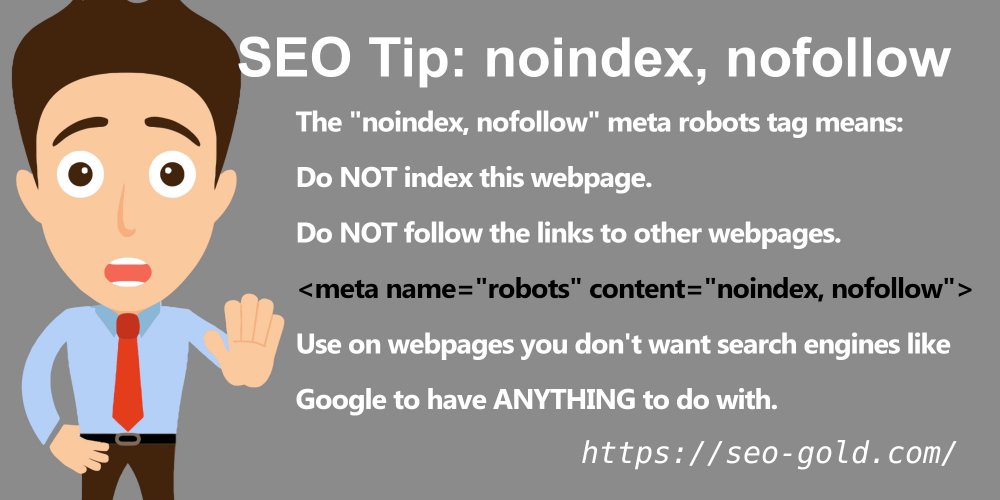

In conclusion, the meta robots tag plays a crucial role in helping websites communicate with search engines and improve their visibility in search results. By providing directives like ‘index’, ‘noindex’, ‘follow’, and ‘nofollow’, website owners can control how search engines crawl and index their content, ultimately influencing their online presence.

Key Takeaways

It is important to remember that the meta robots tag tells search engines which pages to include in search results and whether to follow the links on them. By using simple and clear directives, website owners can optimize their SEO efforts and ensure that their content is easily discoverable by users searching online. Regularly checking and updating meta robots tags is key to maintaining optimal performance and staying competitive in the digital landscape.

Frequently Asked Questions (FAQs)

What Does ‘Noindex’ Mean?

The ‘noindex’ directive in a meta robots tag tells search engines not to include a specific page in their search results. This is useful for pages that you don’t want people to find when they search online.

Can I Use Multiple Directives?

Yes, you can use multiple directives in a meta robots tag. For example, you could use ‘noindex, nofollow’ to tell search engines not to show the page in search results and not to follow any links on that page.

How Often Should I Update My Meta Robots Tags?

It’s a good practice to regularly review and update your meta robots tags, especially if you make changes to your website or add new content. This ensures that search engines are still correctly indexing and showing your pages in search results.