Unlock the mystery behind the X-Robots-Tag and discover how it can affect your website’s search engine optimization strategy.

Welcome, young readers! Today, we’re going to talk about something called the X-Robots-Tag and why it’s important for websites. Have you ever wondered how search engines like Google find and show you websites when you search for something online? Well, the X-Robots-Tag plays a crucial role in helping search engines understand and organize website pages. Let’s dive into what exactly the X-Robots-Tag is and why we should care about it.

What is the X-Robots-Tag?

The X-Robots-Tag is like a little message that website owners can send along with their web pages. This message tells search engines whether they are allowed to show that page in search results or not. So, in simple terms, the X-Robots-Tag is a way for website owners to talk to search engines and say, “Hey, show this page” or “Hey, don’t show this page.”

Why Should We Care About the X-Robots-Tag?

Imagine you keep a diary that you would rather not see on the front shelf of the library. The X-Robots-Tag is like a note on the cover that says, “Please don’t list this one.” It lets you ask search engines to leave certain pages out of their results. It is not a lock, though: anyone who has the link can still open the page, so truly private pages need real protection, such as a password. Understanding how to use the X-Robots-Tag gives you a say in what search engines show from your website, so your content appears the way you want it to.

Understanding Search Engines

What is a Search Engine?

A search engine is like a giant library on the internet. When you type something into the search bar, it helps you find websites and information related to what you’re looking for. Popular search engines include Google, Bing, and Yahoo!

What Does ‘Crawling’ Mean?

Search engines have special programs called crawlers or spiders that visit websites to read and collect information from all the pages. This process is known as crawling. It’s like the search engine is exploring and mapping out the internet to understand what’s out there.

How X-Robots-Tag Works

Let’s imagine the X-Robots-Tag as a note pinned to the front door of a web page. The note travels in the HTTP response header, which is extra information a web server sends along with the page, and it tells search engine robots how to behave when they arrive. It’s like giving these robots specific instructions on what to do next.
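To make this concrete, here is a minimal sketch in Python of a web application that attaches the header to its response. It follows the standard WSGI calling convention; the function name `app` and the page content are placeholders made up for illustration, not part of any particular website.

```python
def app(environ, start_response):
    """Tiny WSGI application that sends X-Robots-Tag with its response."""
    headers = [
        ("Content-Type", "text/html; charset=utf-8"),
        # The instruction for crawlers travels in the HTTP response header,
        # separate from the HTML that visitors see.
        ("X-Robots-Tag", "noindex"),
    ]
    start_response("200 OK", headers)
    # Placeholder page body, invented for this example.
    return [b"<h1>Hello, reader!</h1>"]
```

Any WSGI server can run this function, for example `wsgiref.simple_server.make_server` from Python’s standard library. A crawler that requests the page would then receive `X-Robots-Tag: noindex` among the response headers.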

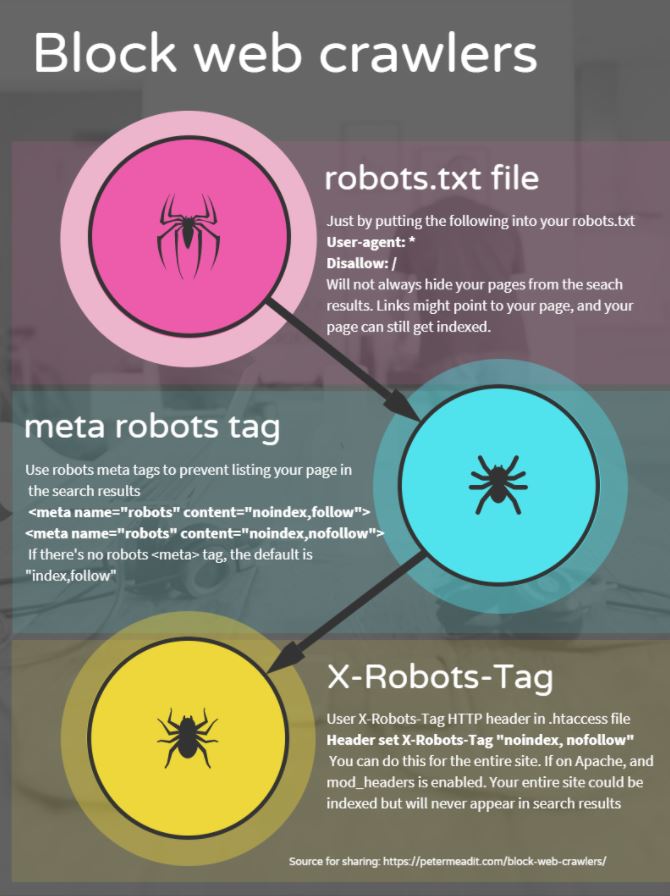

X-Robots-Tag vs. Meta Tags

A robots meta tag is a little note written inside the HTML code of a web page, telling search engines what to do with that page. The X-Robots-Tag carries the same kind of instructions, but it is sent in the HTTP header instead of the page’s code. That difference matters for files that have no HTML to put a meta tag in, such as PDFs and images: for those, the X-Robots-Tag is the way to give robots instructions about indexing or following links. Depending on your goal, you may use one or both of these tools to communicate with search engines.
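One way to see the relationship is that the same robots directives can be written either as a robots meta tag inside a page’s HTML or as an X-Robots-Tag HTTP header. The short Python sketch below prints both forms side by side. The helper names `as_meta_tag` and `as_http_header` are invented for this example.

```python
def as_meta_tag(directives):
    """Robots meta tag: lives inside the <head> of an HTML page."""
    content = ", ".join(directives)
    return f'<meta name="robots" content="{content}">'


def as_http_header(directives):
    """X-Robots-Tag: sent by the server in the HTTP response header."""
    content = ", ".join(directives)
    return f"X-Robots-Tag: {content}"


rules = ["noindex", "nofollow"]
print(as_meta_tag(rules))     # form used inside HTML pages
print(as_http_header(rules))  # form that also works for PDFs, images, etc.
```

The header form is the only option for files that have no HTML `<head>` to hold a meta tag.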

Using X-Robots-Tag

When using X-Robots-Tag, there are specific directives you can include to control how search engines interact with your website. These directives help communicate to search engines what actions they should take regarding indexing specific pages. Some of the common directives include:

- noindex: This directive tells search engines not to index the page, meaning it will not show up in search results.

- nofollow: When this directive is used, search engines will not follow any links on that page.

- noarchive: With this directive, search engines are instructed not to show a cached copy of the page in search results.
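Several directives can be combined in one header by separating them with commas. The Python sketch below builds such a header value and rejects misspelled names. The function `build_x_robots_tag` and the `KNOWN_DIRECTIVES` set are assumptions made for illustration. The set covers only the three directives listed above, while search engines document more.

```python
# Only the three directives described in this article. Search engines
# document more; this set is deliberately small for illustration.
KNOWN_DIRECTIVES = {"noindex", "nofollow", "noarchive"}


def build_x_robots_tag(directives):
    """Join directives into one header value, rejecting unknown names."""
    cleaned = [d.strip().lower() for d in directives]
    unknown = [d for d in cleaned if d not in KNOWN_DIRECTIVES]
    if unknown:
        raise ValueError(f"Unknown directive(s): {', '.join(unknown)}")
    return ", ".join(cleaned)


print(build_x_robots_tag(["noindex", "nofollow"]))  # prints: noindex, nofollow
```

Checking names before sending them helps catch typos such as `no-index`, which a search engine would not recognize.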

Examples of X-Robots-Tag Use

To better understand how X-Robots-Tag is implemented, let’s look at a couple of examples. Imagine you have a page on your website, such as an old draft, that you don’t want search engines to index. By including the directive noindex in the X-Robots-Tag, you can ask search engines to keep that page out of search results.

On the other hand, if you have a page full of links that you don’t want search engines to follow, you can use the nofollow directive in the X-Robots-Tag. Search engines that honor the directive will then not follow any of the links on that particular page, whether they point to your own site or to other sites.
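The two examples above could be sketched in Python as a small function that picks a header value based on the page’s address. The paths `/private/` and `/links.html` and the function name `robots_header_for` are hypothetical, chosen only to illustrate the idea.

```python
def robots_header_for(path):
    """Pick an X-Robots-Tag value for a URL path, or None to send no header."""
    # Hypothetical folder of pages that should stay out of search results.
    if path.startswith("/private/"):
        return "noindex"
    # Hypothetical page whose links crawlers are asked not to follow.
    if path == "/links.html":
        return "nofollow"
    # Everything else gets no header, so normal indexing applies.
    return None
```

A server would call this function for each request and add the `X-Robots-Tag` header only when the result is not `None`.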

Benefits of X-Robots-Tag

The X-Robots-Tag is like a secret code that website owners can use to tell search engines which pages to look at and which to ignore. Imagine you have a big box of toys, but you only want the search engine to see your favorite ones. With the X-Robots-Tag, you can pick and choose which pages show up in search results, giving you better control over how your website appears online.

Enhanced Privacy

Privacy is like having a diary that you only want to share with your closest friends. Some pages on a website, such as drafts or thank-you pages, are not meant for everyone to stumble across in a search. By using the X-Robots-Tag, you can ask search engines to leave these pages out of their results. Remember that this only hides a page from search results: it does not stop someone who knows the address from visiting, so real secrets still need a password.

Challenges of Using X-Robots-Tag

When it comes to using X-Robots-Tag on your website, there are some common mistakes that people often make. One common mistake is misconfiguring the directives, such as using ‘noindex’ when you actually want a page to be indexed, or ‘nofollow’ when you want search engines to follow the links on that page. It’s important to double-check and understand the implications of each directive before implementing them.

Troubleshooting

If you encounter issues with the X-Robots-Tag implementation on your website, don’t panic. There are ways to troubleshoot and fix these problems. One common troubleshooting step is to check the syntax of your X-Robots-Tag directives to ensure they are written correctly. Additionally, you can use tools provided by search engines, such as the URL Inspection tool in Google Search Console, to see how your directives are being interpreted.
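A simple self-check is to look at the headers a page actually returns and compare them with what you intended. The Python sketch below does that for a list of header pairs. The function names `robots_directives` and `check_page` are invented for this example, and the parsing is simplified: it does not handle values aimed at one specific crawler, such as `googlebot: noindex`.

```python
def robots_directives(headers):
    """Collect X-Robots-Tag directives from (name, value) header pairs."""
    found = set()
    for name, value in headers:
        # Header names are case-insensitive, so compare in lowercase.
        if name.lower() == "x-robots-tag":
            for part in value.split(","):
                if part.strip():
                    found.add(part.strip().lower())
    return found


def check_page(headers, should_be_indexed):
    """Return a short message saying whether the headers match the goal."""
    blocked = "noindex" in robots_directives(headers)
    if should_be_indexed and blocked:
        return "Problem: page is marked noindex but should be indexed"
    if not should_be_indexed and not blocked:
        return "Problem: page should be hidden but has no noindex"
    return "OK"
```

For a live page, the header pairs could come from `urllib.request.urlopen(url).headers.items()` in Python’s standard library.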

X-Robots-Tag Best Practices

One of the best practices for effectively using X-Robots-Tag on your website is to regularly monitor its implementation. By keeping an eye on how search engines are interacting with your directives, you can ensure that the right pages are getting indexed or excluded as intended. Regular monitoring can help you catch any errors or oversights early on and make necessary adjustments to optimize your website’s visibility.

Keep Updated with Search Engine Guidelines

It is important to stay informed about what search engines say in their documentation about the X-Robots-Tag. The list of supported directives and the way they are handled can change over time, so check your website’s directives against the current guidelines to keep control over indexing.

Conclusion

In conclusion, the X-Robots-Tag is a powerful tool that allows website owners to control how search engines interact with their pages. By using this tag effectively, website owners can influence which pages get indexed and how they are displayed in search results. Let’s recap the key points covered in this article:

Key Points:

1. The X-Robots-Tag is an HTTP response header that gives search engines instructions on how to index website pages and follow their links.

2. By using directives like ‘noindex’, ‘nofollow’, and ‘noarchive’, website owners can control which pages are indexed and how they appear in search results.

3. X-Robots-Tag can help with privacy by keeping pages out of search results, although it does not stop people from visiting those pages directly.

4. While the X-Robots-Tag offers many benefits, there are also challenges such as implementation errors that website owners may face.

5. Best practices for using X-Robots-Tag include regularly monitoring its effectiveness and staying updated with search engine guidelines.

Understanding and effectively utilizing the X-Robots-Tag is essential for website owners looking to optimize their presence in search engine results. By following best practices and avoiding common mistakes, website owners can take full advantage of this tool to improve their visibility online.

Frequently Asked Questions (FAQs)

What happens if I don’t use X-Robots-Tag correctly?

If you don’t use the X-Robots-Tag correctly, two things can go wrong. If a directive is missing or misspelled, search engines may index pages you meant to leave out, such as duplicate content. If a directive like noindex is applied to the wrong pages, important pages can disappear from search results. Either way, it’s important to use the X-Robots-Tag directives carefully and to check that they do what you intended.

Can I use X-Robots-Tag with meta tags?

Yes, you can use X-Robots-Tag alongside the robots meta tag on your website. Both give instructions to search engine bots: the meta tag sits inside a page’s HTML, while the X-Robots-Tag is sent in the HTTP header and also works for files like PDFs and images. If both are present and they disagree, Google’s documentation says the more restrictive instruction applies, so it is best to keep them consistent.