Unlock the mystery of crawlability and discover how it impacts your website’s visibility and ranking on search engines.

Introduction to Crawlability

Welcome, young reader, to the fascinating world of crawlability! Have you ever wondered how search engines find the information you are looking for on the internet? Well, crawlability plays a crucial role in making sure web pages can be easily discovered by search engines like Google, Bing, and more.

What is Crawlability?

Think of a search engine as a big library with lots of books. Just like a librarian needs to organize and catalog all the books so that people can find them, search engines explore and categorize the information available on the internet. Crawlability describes how easily they can do that on your website. A crawlable site is like a library with clearly mapped shelves, where every book can be found.

Why is Crawlability Important?

Crawlability is super important because it ensures that the web pages you create can be seen by others when they search online. Imagine if your favorite storybook was hidden in a secret room in the library – you wouldn’t be able to share it with your friends! Crawlability helps make sure that your web pages are visible to everyone who wants to find them, just like making sure your favorite book is on the library shelves for everyone to read.

How Search Engines Work

Search engines like Google use special programs called robots, also known as crawlers, to explore the internet. These robots are like helpful little creatures that visit websites and gather information to bring back to the search engine.

Indexing the Web

Once the robots collect information from websites, they store and organize it in a big database. This database is like a massive library where all the information from the web is kept. When you search for something online, the search engine quickly looks through this library to find the most relevant websites for you.

Search engines sort through billions of web pages to find what you’re looking for. The better organized and easier to understand a web page is, the more likely it is to show up in your search results!
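To make the "big library" idea concrete, here is a minimal sketch of an inverted index, a data structure commonly used for this kind of lookup. The page URLs and text are made up for illustration, and real search engines do far more (ranking, word stemming, and so on), so treat this as a toy model rather than how any particular search engine works.

```python
from collections import defaultdict

def build_index(pages):
    """Map each word to the set of page URLs that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index

def search(index, query):
    """Return the pages that contain every word in the query."""
    words = query.lower().split()
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results

# Hypothetical pages that a crawler has already collected.
pages = {
    "https://example.com/cats": "cats are playful pets",
    "https://example.com/dogs": "dogs are loyal pets",
}
index = build_index(pages)
print(search(index, "playful pets"))
```

Because the index is built ahead of time, answering a search only requires looking up a few words instead of rereading every page.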

The Path of Crawling

When search engine robots, also known as crawlers, start their journey through the vast internet, they need directions to find all the hidden treasures – your web pages! Just like following a map to find a buried treasure, these crawlers follow links from one webpage to another. Each link they encounter leads them to a new page, allowing them to index and store information about your website.
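The link-following idea can be sketched in a few lines of Python. This example assumes a tiny made-up website stored in a dictionary (`SITE`) instead of fetching real pages, and the `crawl` function is a simplified illustration, not how any real search engine crawler is built.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

class LinkCollector(HTMLParser):
    """Collect the href value of every <a> tag in a page."""
    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)

def crawl(start_url, fetch):
    """Visit pages breadth-first, following links and skipping repeats."""
    seen = {start_url}
    queue = deque([start_url])
    visited = []
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:  # page not found: nothing to read or follow
            continue
        visited.append(url)
        parser = LinkCollector()
        parser.feed(html)
        for href in parser.links:
            absolute = urljoin(url, href)  # turn "/about" into a full URL
            if absolute not in seen:
                seen.add(absolute)
                queue.append(absolute)
    return visited

# Hypothetical site: each URL maps to the HTML a crawler would download.
SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/blog/post-1">Post</a>',
    "https://example.com/blog/post-1": "<p>No links here</p>",
    "https://example.com/orphan": "<p>Nothing links to me</p>",
}
visited = crawl("https://example.com/", SITE.get)
print(visited)
```

Notice that the `/orphan` page is never visited: no other page links to it, so a crawler that only follows links has no way to find it.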

Understanding URLs

Imagine URLs as the unique addresses of each webpage on the internet. These addresses act like a street sign, guiding crawlers to the exact location of your web content. By understanding URLs, crawlers can efficiently navigate through the internet to find and index your web pages. So, having clear and descriptive URLs is like having helpful signs that lead search engine crawlers straight to your content!
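As a small illustration, Python's standard `urllib.parse` module can split a URL into the parts of its "address". The URL below is a made-up example.

```python
from urllib.parse import urlparse

# Hypothetical URL with a clear, descriptive path.
parts = urlparse("https://example.com/blog/what-is-crawlability?ref=home")

print(parts.scheme)  # https
print(parts.netloc)  # example.com
print(parts.path)    # /blog/what-is-crawlability
print(parts.query)   # ref=home
```

A path like `/blog/what-is-crawlability` tells both people and crawlers what the page is about, which a path like `/p?id=4821` does not.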

Factors Affecting Crawlability

A website’s structure plays a crucial role in determining how easily search engine crawlers can navigate and index its content. Just like a well-organized library makes it simpler to find books, a well-structured website helps crawlers efficiently discover and categorize web pages. When a website is logically organized with clear navigation menus and internal linking, crawlers can easily move from page to page, ensuring all valuable content is indexed.

Using Robots.txt

Robots.txt is like a virtual “Keep Out” sign for search engine crawlers. It is a plain-text file placed in the root directory of a website that tells crawlers which pages they may or may not request. Used strategically, it steers crawlers toward important pages and away from low-value or duplicate ones, so the time they spend on your site goes where it matters. Keep in mind that robots.txt controls crawling, not indexing: a blocked page can still appear in search results if other sites link to it, and the file does not protect sensitive content. To keep a page out of search results, use a noindex directive or put the page behind a login.
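Here is a short sketch of how a well-behaved crawler might read those rules, using Python's standard `urllib.robotparser` module. The rules and URLs are invented for the example, and each search engine has its own parser, so details of rule matching can differ from what this module does.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for example.com.
rules = """
User-agent: *
Disallow: /admin/
Disallow: /search
""".splitlines()

parser = RobotFileParser()
parser.parse(rules)

# Ask whether any crawler ("*") may request each URL.
blog_allowed = parser.can_fetch("*", "https://example.com/blog/crawlability")
admin_allowed = parser.can_fetch("*", "https://example.com/admin/settings")

print(blog_allowed)   # True
print(admin_allowed)  # False
```

The file only works for crawlers that choose to follow it, which is one more reason not to rely on it for hiding private content.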

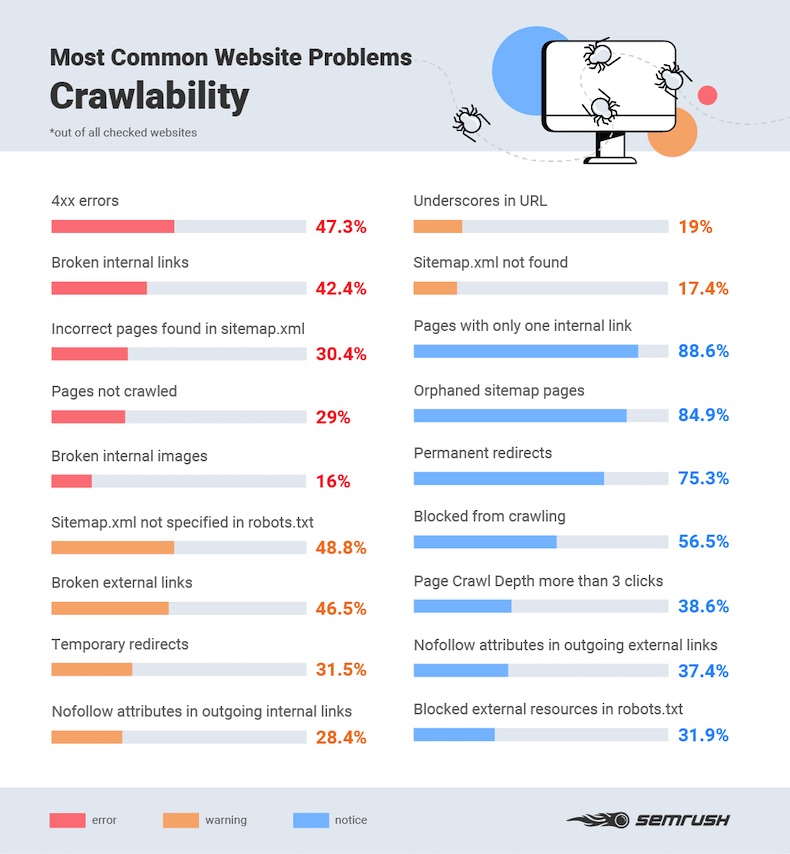

Creating a Sitemap

Think of a sitemap as a blueprint that guides search engine crawlers through the intricate maze of a website. A sitemap is a file, usually in XML format, that lists the URLs you want search engines to find, making it easier for crawlers to discover and index pages. Creating a sitemap and submitting it to search engines helps crawlers find your pages, including ones that few other pages link to. It does not guarantee that every page will be indexed, but it improves the overall crawlability of the site.
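For a sense of what a sitemap looks like, the following sketch builds a small one with Python's standard `xml.etree.ElementTree` module. The `build_sitemap` helper, the URLs, and the dates are made up for illustration; the `urlset`, `url`, `loc`, and `lastmod` element names come from the sitemaps.org format.

```python
import xml.etree.ElementTree as ET

def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified_date) pairs."""
    urlset = ET.Element(
        "urlset", xmlns="http://www.sitemaps.org/schemas/sitemap/0.9"
    )
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    # Returns a string without the <?xml ...?> declaration line.
    return ET.tostring(urlset, encoding="unicode")

# Hypothetical pages and the dates they last changed.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-01-15"),
    ("https://example.com/blog/what-is-crawlability", "2024-02-01"),
])
print(sitemap_xml)
```

Many website platforms generate this file automatically, so writing it by hand is usually only needed for custom-built sites.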

Avoiding Broken Links

Broken links are like dead ends for search engine crawlers – they prevent crawlers from navigating through a website efficiently. When a crawler encounters a broken link, it hits a roadblock and may not be able to index the content beyond that point. To maintain good crawlability, website owners should regularly check for broken links and fix them promptly. By eliminating broken links, crawlers can smoothly navigate a website, ensuring all pages are properly indexed.
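A basic broken-link check can be sketched as follows. To keep the example runnable without an internet connection, it takes a `get_status` function as a parameter; here that function is a stand-in that looks up made-up status codes in a dictionary, whereas a real checker would request each URL and read the HTTP status code from the response.

```python
def find_broken_links(links, get_status):
    """Return (url, status) pairs for links that fail to load.

    get_status is a hypothetical callable that returns the HTTP status
    code for a URL, or None if the site could not be reached at all.
    """
    broken = []
    for url in links:
        status = get_status(url)
        # 4xx and 5xx codes mean the page did not load successfully.
        if status is None or status >= 400:
            broken.append((url, status))
    return broken

# Made-up status codes standing in for real HTTP responses.
FAKE_STATUSES = {
    "https://example.com/": 200,
    "https://example.com/old-page": 404,
    "https://example.com/blog": 200,
}
broken = find_broken_links(
    ["https://example.com/", "https://example.com/old-page",
     "https://example.com/blog", "https://example.com/unreachable"],
    FAKE_STATUSES.get,
)
print(broken)
```

Once a broken link is found, the usual fixes are to update the link, restore the missing page, or redirect the old address to a working one.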

Improving Crawlability

Improving crawlability is crucial for ensuring that your website gets noticed by search engines and appears in search results. By following some best practices, you can make it easier for search engine crawlers to navigate and index your site. Here are some tips to enhance the crawlability of your website:

Regular Updates

One of the best ways to improve crawlability is by regularly updating your website with fresh content. Search engine crawlers are more likely to revisit your site when they detect new content, which can help improve your site’s visibility in search results.

Mobile Friendliness

With the increasing use of mobile devices for browsing the internet, it’s essential to ensure that your website is mobile-friendly. A mobile-responsive design not only enhances the user experience but also matters for crawling: Google primarily crawls and indexes the mobile version of a page (known as mobile-first indexing), so content that is missing from the mobile version may be overlooked.

Quality Content

High-quality and relevant content plays a significant role in improving crawlability and user experience. By providing valuable information that matches what users are searching for, you can increase the chances of your website being crawled and indexed by search engines.

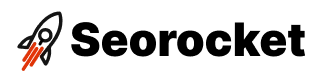

Common Crawlability Issues

Ensuring that your website is easily accessible and visible to search engines is crucial for attracting visitors. However, there are some common crawlability issues that can hinder this process. Let’s explore two of the most prevalent problems and how to address them.

Duplicate Content

Have you ever copied homework from a friend only to find out that the teacher noticed and wasn’t pleased? Duplicate content is similar to that scenario but with search engines instead of teachers. When the same content appears on multiple pages of a website or across different websites, it can confuse search engine crawlers.

To tackle this issue, make sure each page on your website offers unique and valuable content. Avoid copying and pasting text from elsewhere, and instead focus on creating original and engaging material. By reducing duplicate content, you help search engines better understand and index your website, ultimately improving its crawlability.
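One simple way to spot exact duplicates on your own site is to compare a fingerprint of each page's text. The sketch below is an assumption-laden illustration: the `fingerprint` and `find_duplicates` helpers and the sample pages are invented, and it only catches pages whose text is identical apart from capitalization and spacing. Search engines use more sophisticated methods that also detect near-duplicates.

```python
import hashlib
from collections import defaultdict

def fingerprint(text):
    """Hash the text after lowercasing and collapsing whitespace."""
    normalized = " ".join(text.lower().split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def find_duplicates(pages):
    """Group URLs whose page text has the same fingerprint."""
    groups = defaultdict(list)
    for url, text in pages.items():
        groups[fingerprint(text)].append(url)
    return [urls for urls in groups.values() if len(urls) > 1]

# Hypothetical page text collected from a site.
pages = {
    "https://example.com/shoes": "Our best running shoes for every season.",
    "https://example.com/shoes?sort=price": "Our best  running shoes for every season.",
    "https://example.com/hats": "Warm hats for cold days.",
}
duplicates = find_duplicates(pages)
print(duplicates)
```

In this made-up example, the same product page is reachable under two URLs, which is a common source of duplicate content.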

Slow Page Loading

Imagine waiting for your favorite game to load on your computer but it takes forever, causing frustration. Just like you wouldn’t enjoy a slow-loading game, search engine crawlers dislike sluggish websites. When pages take a long time to load, crawlers might give up or prioritize other faster sites, resulting in poor crawlability.

To combat slow page loading, optimize your website by compressing images, minifying code, and utilizing content delivery networks (CDNs). These techniques help speed up your site’s performance, making it more appealing to both users and search engine robots. By ensuring fast-loading pages, you enhance your website’s crawlability and user experience.

Tools to Check Crawlability

Ensuring that your website is easily accessible to search engine crawlers is crucial for improving its visibility online. By employing various tools, you can check and enhance the crawlability of your website effectively. Let’s explore some tools that can help you in this endeavor.

Google Search Console

Google Search Console is a free tool provided by Google that allows website owners to monitor, maintain, and troubleshoot their site’s presence in Google search results. It provides valuable insights into how Google views your site, identifies crawl errors, and offers suggestions for improvement.

By checking the Page indexing report in Google Search Console (previously called the Coverage report), you can see which pages Google could not crawl or index and why. You can also submit sitemaps to help Google better understand your site’s structure and discover all the pages you want indexed.

Other Useful Tools

In addition to Google Search Console, there are other tools available that can help you assess and enhance the crawlability of your website. Tools like Screaming Frog SEO Spider, SEMrush, and Moz Pro offer features that allow you to identify crawl issues, analyze site structure, and monitor crawlability over time.

Screaming Frog SEO Spider, for example, can crawl your website like a search engine, analyzing key elements such as URLs, metadata, and broken links. It can help you identify issues that may be affecting your site’s crawlability so that you know what to fix.

Similarly, SEMrush and Moz Pro offer in-depth site audit features that can pinpoint crawlability issues, keyword optimization opportunities, and overall site health. By utilizing these tools, you can address any crawlability challenges and optimize your website for better search engine visibility.

The Future of Crawlability

As we look ahead to the future of search engines and how they discover and index web pages, exciting advancements in artificial intelligence (AI) and machine learning are set to revolutionize crawlability.

AI-Powered Crawlers

Imagine search engine robots (crawlers) equipped with the power of artificial intelligence. Such crawlers could explore websites more efficiently and understand the context and relevance of content on a deeper level. Combined with what search engines learn from search patterns, this could lead to more accurate search results.

The Role of Machine Learning

Machine learning already helps improve the algorithms that search engines use to rank and display web pages. Through machine learning, search engines can continuously adapt and learn from user interactions, leading to more relevant search results and a better overall user experience. Crawlability remains the foundation for all of this: a page has to be crawled and indexed before any ranking algorithm can consider it.

Conclusion

In conclusion, crawlability is a crucial factor for the success of any website. By understanding what crawlability is and why it is important, website owners can ensure that their web pages are easily discoverable by search engines. Maintaining good crawlability not only helps in improving search engine visibility but also enhances the overall user experience.

Key Takeaways

To summarize, here are the key points discussed in this article about crawlability:

- Crawlability refers to how easily search engine robots can access and navigate through a website.

- Factors like website structure, robots.txt files, sitemaps, and avoiding broken links influence crawlability.

- Regular updates, mobile-friendliness, and high-quality content are essential for improving crawlability.

- Common crawlability issues include duplicate content and slow page loading times.

- Tools like Google Search Console can help website owners check and enhance crawlability.

- The future of crawlability involves advancements in AI and machine learning for smarter and more efficient crawlers.

It is important for website owners to implement best practices to ensure good crawlability, which ultimately leads to better visibility and accessibility on the internet. By staying informed about crawlability and keeping up with the latest trends, website owners can set themselves up for long-term success in the digital landscape.

FAQs

What Happens if My Site is Not Crawlable?

If your site is not easily accessible and navigable by search engine crawlers, it may not be effectively indexed and ranked in search engine results. This means that your website may not show up when people search for related topics or keywords, leading to decreased visibility and traffic.

How Often Should I Update My Sitemap?

It is recommended to update your sitemap whenever you make significant changes to your website’s structure, content, or URLs. By keeping your sitemap up to date, you help search engine crawlers better understand your website’s organization and ensure that new pages are easily discoverable.

Can I Block Crawlers from Certain Pages?

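Yes, you can control which pages search engine crawlers can access by using a file called robots.txt. This file allows you to specify which parts of your website should or should not be crawled, helping you manage crawler access to specific pages and content. If your goal is to keep a page out of search results entirely, use a noindex directive instead, because a page blocked in robots.txt can still be indexed if other pages link to it.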